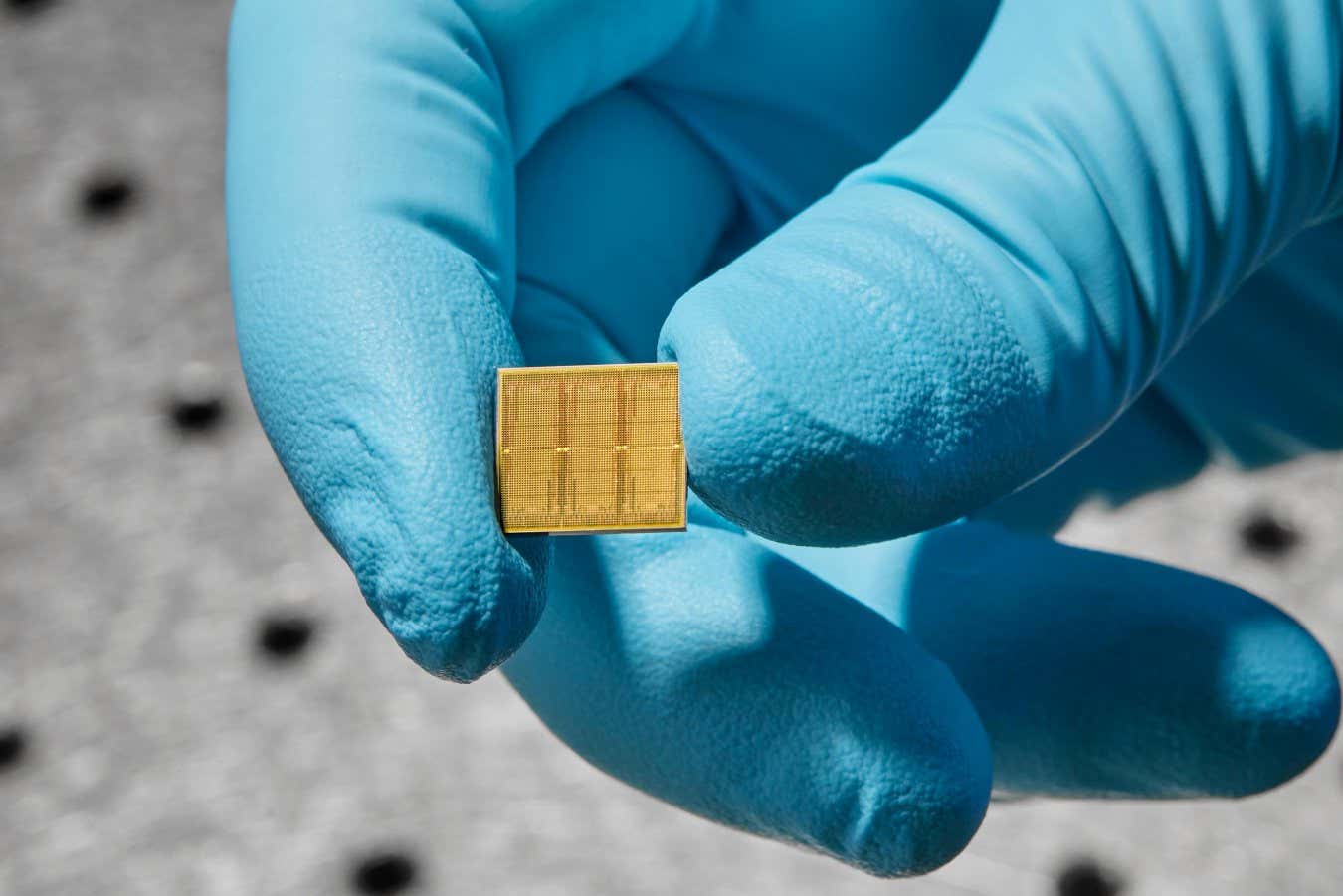

IBM Research has developed an analogue computer chip that can run artificial intelligence (AI) speech recognition models up to 14 times more efficiently than traditional chips. This breakthrough could help address both the growing energy consumption of AI research and the global shortage of the digital chips typically used for AI applications. The analogue chip is designed to reduce bottlenecks in AI development.

There is currently high demand for GPU chips, which were originally intended for gaming but are now commonly used for training and running AI models, and supply is struggling to keep up. Additionally, studies show that the energy consumption of AI increased 100-fold between 2012 and 2021, with most of that energy coming from fossil fuels. Together, these factors suggest that the growth in the scale of AI models may soon stall.

One of the limitations of current AI hardware is the need for data to constantly travel between memory and processors, which leads to significant bottlenecks. To address this issue, IBM has developed the analogue compute-in-memory (CiM) chip. This chip performs calculations directly within its own memory, eliminating the need for frequent data transfers. It contains 35 million phase-change memory cells, similar to transistors in traditional computer chips, but with the ability to represent synaptic weights between artificial neurons. This enables the chip to efficiently store and process these weights without excessive data recall or storage operations.
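The compute-in-memory idea can be illustrated with a minimal, idealized sketch. It assumes a crossbar-style array in which each stored weight acts as a conductance, each input is applied as a voltage, and the currents on each output line add up to give one element of a matrix-vector product. The function names, the two-cells-per-weight encoding for signed values, and the example numbers are all hypothetical and chosen for illustration; the article does not describe how IBM's chip encodes weights, and real devices also have noise and limited precision that this sketch ignores.

```python
# Illustrative sketch only: an idealized analogue crossbar, not IBM's design.

def weights_to_conductances(weights, g_max=1.0):
    """Map signed weights onto pairs of non-negative conductances.

    Assumption: each weight is stored in two cells (g_plus, g_minus) and read
    out as their difference. This is a common textbook encoding, not a detail
    taken from the article.
    """
    # Largest magnitude sets the scale; fall back to 1.0 if all weights are 0.
    scale = max(abs(w) for row in weights for w in row) or 1.0
    g_plus = [[g_max * max(w, 0.0) / scale for w in row] for row in weights]
    g_minus = [[g_max * max(-w, 0.0) / scale for w in row] for row in weights]
    # The last value converts summed currents back into weight units.
    return g_plus, g_minus, scale / g_max


def crossbar_matvec(g_plus, g_minus, voltages, unscale):
    """Compute y = W x where the weights live, without moving them.

    Each cell contributes a current g * v (Ohm's law), and the currents on an
    output line sum (Kirchhoff's current law), which is a dot product.
    """
    outputs = []
    for row_plus, row_minus in zip(g_plus, g_minus):
        current = sum(
            (gp - gm) * v for gp, gm, v in zip(row_plus, row_minus, voltages)
        )
        outputs.append(current * unscale)
    return outputs


# Hypothetical 2x3 weight matrix and input vector.
weights = [[0.5, -1.0, 0.25],
           [2.0, 0.0, -0.5]]
inputs = [1.0, 2.0, 4.0]

g_plus, g_minus, unscale = weights_to_conductances(weights)
result = crossbar_matvec(g_plus, g_minus, inputs, unscale)
print(result)
```

In this picture the weights are written once and stay in place; only the inputs and outputs move, which is the data-transfer saving the paragraph above describes.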

In tests focused on speech recognition tasks, the analogue chip demonstrated an efficiency of 12.4 trillion operations per second per watt, making it up to 14 times more efficient than conventional processors. Despite this promising performance, the chip is still in the early stages of development, according to Intel’s Hechen Wang. However, experiments have shown that it can effectively support commonly used forms of AI neural networks, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs). It also has the potential to support popular applications like ChatGPT.
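To put the efficiency figure in more familiar units, the short calculation below converts it into energy per operation and works out the conventional-processor efficiency implied by the 14-fold comparison. It uses only the two numbers quoted above. Reading "up to 14 times" as an exact ratio is an assumption, so the implied baseline is a rough lower bound rather than a measured value.

```python
# Figures quoted in the text above.
OPS_PER_SECOND_PER_WATT = 12.4e12  # 12.4 trillion operations per second per watt
MAX_EFFICIENCY_RATIO = 14          # "up to 14 times" more efficient

# One watt is one joule per second, so ops/s/W is the same as ops per joule.
joules_per_op = 1.0 / OPS_PER_SECOND_PER_WATT
picojoules_per_op = joules_per_op * 1e12

# Assumption: treating the "up to 14x" ratio as exact gives the lowest
# conventional-processor efficiency consistent with the claim.
implied_baseline_tops_per_watt = (
    OPS_PER_SECOND_PER_WATT / MAX_EFFICIENCY_RATIO / 1e12
)

print(f"{picojoules_per_op:.3f} pJ per operation")
print(f"{implied_baseline_tops_per_watt:.2f} TOPS/W implied baseline")
```

Under these assumptions each operation costs roughly 0.08 picojoules, and the comparison processors would deliver a little under 0.9 trillion operations per second per watt.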

While highly customized chips offer exceptional efficiency, Wang notes that they may not be suitable for all tasks, much as a GPU cannot perform all the functions of a CPU. However, if AI continues to progress as anticipated, highly customized chips like the analogue AI chip could become more prevalent. Wang also highlights that the chip’s specialization does not limit it to speech recognition. As long as CNN or RNN models are in use, the analogue chip retains its value, and it could reduce costs compared with CPUs or GPUs because it uses both power and silicon area more efficiently.

In summary, running AI models on analogue chips, specifically the analogue compute-in-memory chip developed by IBM, offers a possible answer to the energy consumption challenges in AI research. Beyond improving efficiency, the approach could also help ease the shortage of digital chips in the market. While still in the early stages, the analogue chip shows promise in supporting various AI applications beyond speech recognition. With further advancements and adoption, highly customized chips could become more common in the AI landscape.