US President Joe Biden has issued an executive order on artificial intelligence (AI) intended to demonstrate US leadership on AI safety and security. The order directs various government agencies to develop guidelines for testing and using AI systems. Putting those guidelines into practice, however, will ultimately depend on action by US lawmakers and the voluntary cooperation of tech companies.

The executive order specifically calls on the National Institute of Standards and Technology (NIST) to set standards for “red team testing,” which involves probing AI systems for vulnerabilities before they are released to the public. Sarah Kreps at Cornell University says the language and discussion surrounding the order suggest the administration wants to be seen as proactive on AI regulation.

Experts note that the order's release coincides with the UK government’s AI summit. They caution, however, that the order alone will have limited impact unless it is backed by bipartisan legislation and funding from the US Congress, which seems unlikely in the run-up to a presidential election.

This order is part of a series of non-binding actions taken by the Biden administration on AI. For instance, last year, the administration released a blueprint for an AI Bill of Rights and has recently sought voluntary commitments from major AI development companies.

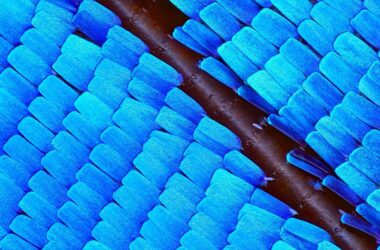

One significant provision of the executive order concerns “foundation models,” meaning large AI models trained on extensive datasets. Companies developing foundation models that could pose a serious risk to national security, economic security, or public health and safety must notify the federal government when training such models and share the results of their safety tests.

Foundation models that could fall under this category include OpenAI’s GPT-3.5 and GPT-4, Google’s PaLM 2, and Stability AI’s Stable Diffusion model. The order would compel companies to disclose more information about the inner workings of their models, moving away from their traditionally secretive approach.

However, the order’s impact is contingent on how the government defines which foundation models pose a “serious risk.” Experts also question the qualifiers and ambiguities in the order’s wording, such as the lack of clarity on the definition of a “foundation model” and the criteria for identifying threats.

Compared with the European Union and China, the US lacks robust data protection laws on which AI regulation could build. China has enacted targeted laws addressing specific aspects of AI, such as generative AI and the use of facial recognition. The European Union, by contrast, is pursuing a comprehensive approach that covers AI as a whole, and is working to reach political agreement among its member states.

Although the US leads in AI development, it has no concrete regulations in the field. Instead, it emphasizes the value of “AI with democratic values” and cooperation with allied countries.

Overall, Biden’s executive order signals the administration’s intention to address AI safety and security. Its effectiveness, however, will depend on further legislative support and on clearer definitions of which models fall within its scope and which risks it is meant to address.