I have been active on the internet for over two decades, and I left behind a trail of blogs and social media posts in my teenage years. Now, as a journalist, I often write about social media, privacy, and artificial intelligence (AI). Recently, when ChatGPT, an AI chatbot, told me that my past writing might have influenced its responses to other users’ prompts, I felt the need to erase my data from its memory.

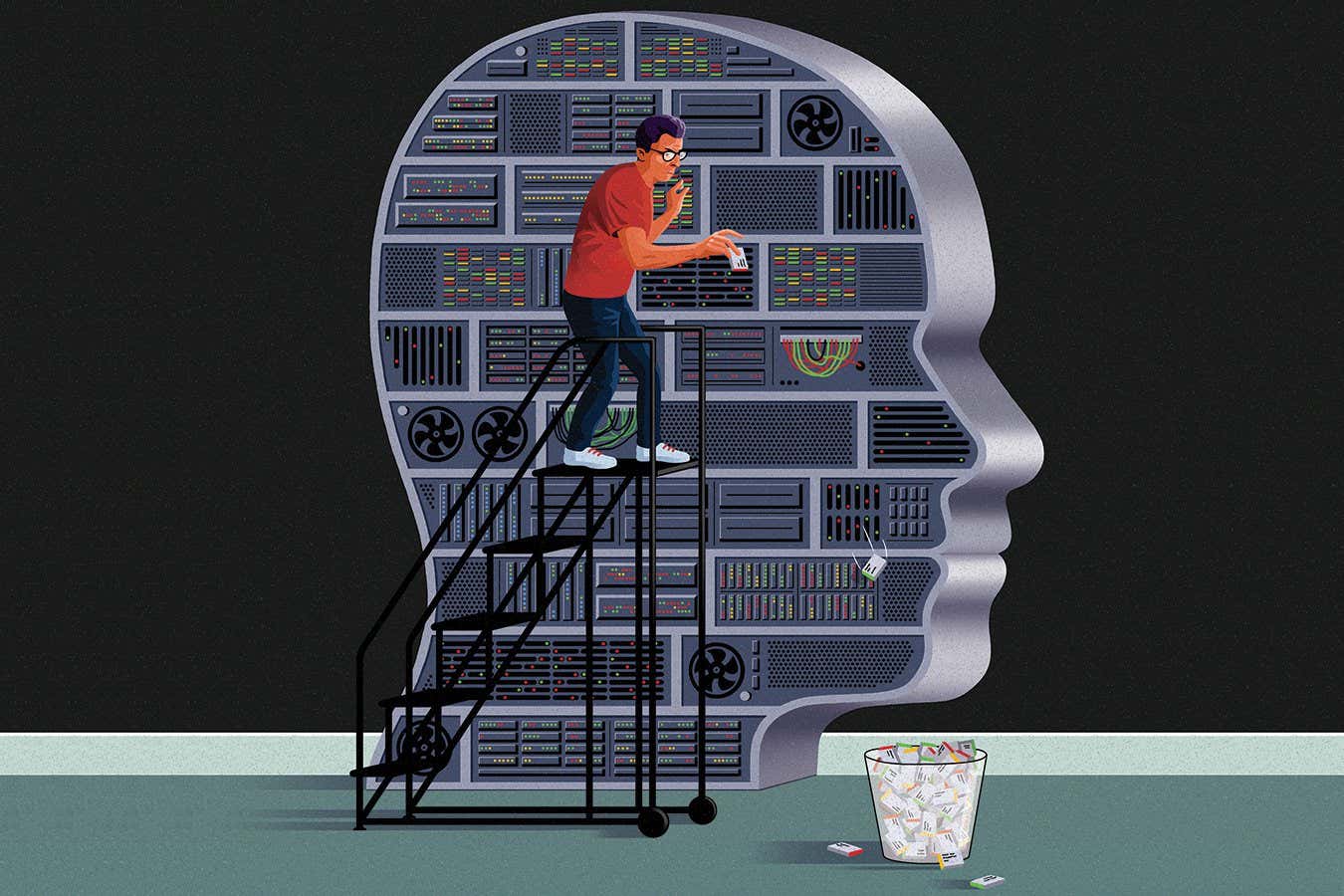

To my surprise, I discovered that there is no simple delete button. AI-powered chatbots like ChatGPT are trained on vast datasets that include numerous websites and online articles. Once trained, they have no built-in way to forget what they have learned.

This inability to forget puts AI chatbots like ChatGPT at risk of unintentionally disclosing sensitive personal information that has appeared online. It also makes it hard to comply with “right-to-be-forgotten” regulations, which require organizations to delete personal data upon request. Additionally, it opens the door for hackers to manipulate AI outputs by injecting false information or malicious instructions into the training data.

Consequently, many computer scientists are now working on techniques that let AI forget. Although the task is extremely difficult, solutions for “machine unlearning” are gradually emerging. This work also matters beyond privacy and combating misinformation. If we aim to create AI systems that can truly learn and think like humans, designing them to forget might be a crucial part of the engineering.

The latest generation of AI-powered chatbots, including ChatGPT and Google’s Bard, is built on large language models (LLMs). These LLMs are trained on vast amounts of text so that they can generate text-based responses to our input. However, this presents substantial challenges when it comes to privacy and security.

In conclusion, the increasing focus on privacy concerns in AI development has led to efforts to teach AI chatbots to forget. While this task is not easy, advancements in “machine unlearning” are starting to emerge. Addressing privacy and security concerns is not the sole purpose of this endeavor – it also plays a vital role in creating AI systems that can truly learn and think like humans.